
OpenCV Template Matching

Updated 2017-09-21 11:31:31

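Goal

In this tutorial you will learn how to:

- Use the OpenCV function matchTemplate() to search for matches between an image patch and an input image

- Use the OpenCV function minMaxLoc() to find the maximum and minimum values (as well as their positions) in a given array.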

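Theory

What is template matching?

Template matching is a technique for finding areas of an image that match (are similar to) a template image (patch).

While the patch must be a rectangle, it may be that not all of the rectangle is relevant. In such a case, a mask can be used to isolate the portion of the patch that should be used to find the match.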

它是如何工作的?

- 我们需要两个主要组件:

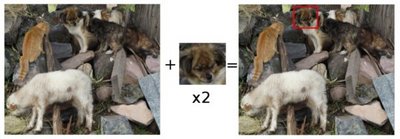

- 源图像(I):我们期望找到与模板图像匹配的图像

- 模板图像(T):将与模板图像进行比较的补丁图像

我们的目标是检测最匹配的区域:

- 要识别匹配区域,我们必须通过滑动来比较模板图像与源图像:

- 通过滑动,我们的意思是一次移动补丁一个像素(从左到右,从上到下)。在每个位置,计算度量,以便它表示在该位置处的匹配的“好”还是“坏”(或者与图像的特定区域相似)。

对于T的每个位置超过I,则存储在该度量结果矩阵 R 。R中的每个位置(x,y)都包含匹配度量

上面的图片是一个度量tm_ccorr_normed滑动补丁结果R。最亮的位置表示最高匹配。如您所见,红色圆圈标记的位置可能是具有最高值的位置,因此这个位置(由点形成的矩形,角度和宽度和高度等于补丁图像)被认为是匹配。

- 实际上,我们使用函数minMaxLoc()定位R矩阵中最高的值(或更低的取决于匹配方法的类型)
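To make the sliding procedure concrete, here is a minimal NumPy sketch. It is not OpenCV's implementation, which is heavily optimized. The sketch computes the squared-difference metric at every position for a single-channel image. The function name `sqdiff_map` and the toy arrays are hypothetical. Note that R has (H - h + 1) rows and (W - w + 1) columns, the same size the C++ code allocates with `img.rows - templ.rows + 1` and `img.cols - templ.cols + 1`.

```python
import numpy as np

def sqdiff_map(I, T):
    """Slide template T over image I one pixel at a time (left to right,
    top to bottom) and store the squared-difference metric in R."""
    I = np.asarray(I, dtype=np.float64)
    T = np.asarray(T, dtype=np.float64)
    H, W = I.shape
    h, w = T.shape
    # One entry per valid top-left position of T inside I
    R = np.empty((H - h + 1, W - w + 1))
    for y in range(R.shape[0]):          # top to bottom
        for x in range(R.shape[1]):      # left to right
            window = I[y:y + h, x:x + w]
            R[y, x] = np.sum((T - window) ** 2)
    return R

# Cut a 2x2 patch out of a 4x5 image, then find it again
I = np.arange(20).reshape(4, 5)
T = I[1:3, 2:4]
R = sqdiff_map(I, T)
y, x = np.unravel_index(np.argmin(R), R.shape)   # lowest value = best match
print(R.shape, (int(x), int(y)))                 # → (3, 4) (2, 1)
```

For this metric the best match is the minimum of R. A metric such as TM_CCORR_NORMED would instead be maximized.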

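How does the mask work?

- If masking is needed for the match, three components are required:

- Source image (I): the image in which we expect to find a match to the template image

- Template image (T): the patch image that will be compared to the source image

- Mask image (M): the mask, a grayscale image that masks the template

- Only two matching methods currently accept a mask: CV_TM_SQDIFF and CV_TM_CCORR_NORMED (see below for an explanation of all the matching methods available in OpenCV).

- The mask must have the same dimensions as the template.

- The mask should have a CV_8U or CV_32F depth and the same number of channels as the template image. In the CV_8U case, the mask values are treated as binary, i.e. zero and non-zero. In the CV_32F case, the values should fall into the [0..1] range, and the template pixels will be multiplied by the corresponding mask pixel values. Since the input images in the sample are of type CV_8UC3, the mask is also read as a color image.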
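Here is a rough NumPy sketch of the binary (CV_8U) case. It assumes the squared-difference metric and a single-channel template, and only template pixels whose mask value is non-zero contribute to the score. The helper `masked_sqdiff_map` is hypothetical and illustrative only. The weighted CV_32F case is not modeled.

```python
import numpy as np

def masked_sqdiff_map(I, T, M):
    """Squared-difference matching in which only template pixels with a
    non-zero mask value contribute (binary, CV_8U-style interpretation)."""
    I = np.asarray(I, dtype=np.float64)
    T = np.asarray(T, dtype=np.float64)
    keep = np.asarray(M) != 0                 # zero vs non-zero
    if keep.shape != T.shape:
        raise ValueError("the mask must have the same size as the template")
    h, w = T.shape
    R = np.empty((I.shape[0] - h + 1, I.shape[1] - w + 1))
    for y in range(R.shape[0]):
        for x in range(R.shape[1]):
            diff = T - I[y:y + h, x:x + w]
            R[y, x] = np.sum(diff[keep] ** 2)  # masked-out pixels are ignored
    return R

I = np.arange(25, dtype=np.float64).reshape(5, 5)
T = I[1:4, 1:4].copy()
T[0, 0] = 100                                  # corrupt one "irrelevant" pixel
M = np.ones((3, 3), dtype=np.uint8)
M[0, 0] = 0                                    # ...and mask it out

R_masked = masked_sqdiff_map(I, T, M)
R_plain = masked_sqdiff_map(I, T, np.ones_like(M))
print(R_masked[1, 1], R_plain[1, 1])           # → 0.0 8836.0
```

With the mask, the true location scores a perfect 0. Without it, the single corrupted pixel adds (100 - 6)^2 to that location's score.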

OpenCV中可以使用哪些匹配方法?

OpenCV在函数matchTemplate()中实现模板匹配。可用的方法有以上6种:

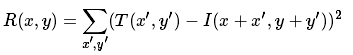

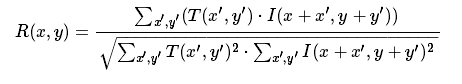

- method=CV_TM_SQDIFF

- method=CV_TM_SQDIFF_NORMED

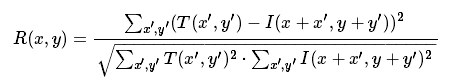

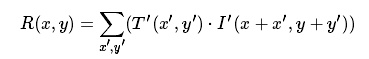

- method=CV_TM_CCORR

- method=CV_TM_CCORR_NORMED

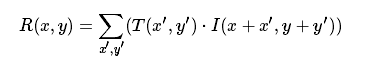

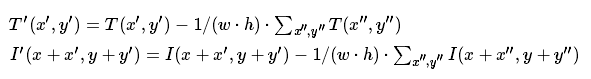

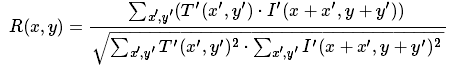

- method=CV_TM_CCOEFF

where

- method=CV_TM_CCOEFF_NORMED
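The six formulas can be checked by hand on a single window. The sketch below uses plain NumPy for a single channel at one window position, and the `scores` helper is hypothetical. It evaluates all six metrics for a template `T` and the equally sized image patch `P` under it. It assumes neither `T` nor `P` is constant, since otherwise the CCOEFF_NORMED denominator is zero.

```python
import numpy as np

def scores(T, P):
    """Evaluate the six matchTemplate metrics at one window position.
    T is the template, P the equally sized image patch under it."""
    T = np.asarray(T, dtype=np.float64)
    P = np.asarray(P, dtype=np.float64)
    Tc = T - T.mean()                      # T': template minus its mean
    Pc = P - P.mean()                      # I': patch minus its mean
    norm = np.sqrt(np.sum(T ** 2) * np.sum(P ** 2))
    sqdiff = np.sum((T - P) ** 2)
    ccorr = np.sum(T * P)
    ccoeff = np.sum(Tc * Pc)
    return {
        "SQDIFF": sqdiff,
        "SQDIFF_NORMED": sqdiff / norm,
        "CCORR": ccorr,
        "CCORR_NORMED": ccorr / norm,
        "CCOEFF": ccoeff,
        "CCOEFF_NORMED": ccoeff / np.sqrt(np.sum(Tc ** 2) * np.sum(Pc ** 2)),
    }

T = np.array([[10, 20], [30, 40]])
exact = scores(T, T)               # patch identical to the template
brighter = scores(T, 2 * T + 5)    # same pattern, more contrast, brighter
print(round(brighter["CCOEFF_NORMED"], 6), round(brighter["CCORR_NORMED"], 4))  # → 1.0 0.9994
```

The patch `2 * T + 5` has the same pattern as the template, with higher contrast and brightness. CCOEFF_NORMED subtracts the means and divides by the norms, so it still reports a perfect 1.0. CCORR_NORMED drops slightly below 1, and SQDIFF is no longer 0.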

Code

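C++

What does this program do?

- Loads an input image, an image patch (template), and optionally a mask

- Performs a template matching procedure by using the OpenCV function matchTemplate() with any of the 6 matching methods described before. The user can choose the method by entering its selection in the trackbar. If a mask is supplied, it will only be used for the methods that support masking

- Normalizes the output of the matching procedure

- Localizes the location with the highest matching probability

- Draws a rectangle around the area corresponding to the highest match

- Downloadable code: Click here

- Code at a glance: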

#include "opencv2/imgcodecs.hpp"
#include "opencv2/highgui.hpp"
#include "opencv2/imgproc.hpp"
#include <iostream>

using namespace std;
using namespace cv;

// Global variables
bool use_mask;
Mat img; Mat templ; Mat mask; Mat result;
const char* image_window = "Source Image";
const char* result_window = "Result window";

int match_method;
int max_Trackbar = 5;

// Function header
void MatchingMethod( int, void* );

int main( int argc, char** argv )
{
  if (argc < 3)
  {
    cout << "Not enough parameters" << endl;
    cout << "Usage:\n./MatchTemplate_Demo <image_name> <template_name> [<mask_name>]" << endl;
    return -1;
  }

  // Load the image, the template and (optionally) the mask
  img = imread( argv[1], IMREAD_COLOR );
  templ = imread( argv[2], IMREAD_COLOR );

  if(argc > 3) {
    use_mask = true;
    mask = imread( argv[3], IMREAD_COLOR );
  }

  if(img.empty() || templ.empty() || (use_mask && mask.empty()))
  {
    cout << "Can't read one of the images" << endl;
    return -1;
  }

  // Create windows
  namedWindow( image_window, WINDOW_AUTOSIZE );
  namedWindow( result_window, WINDOW_AUTOSIZE );

  // Create the trackbar; MatchingMethod is called whenever it changes
  const char* trackbar_label = "Method: \n 0: SQDIFF \n 1: SQDIFF NORMED \n 2: TM CCORR \n 3: TM CCORR NORMED \n 4: TM COEFF \n 5: TM COEFF NORMED";
  createTrackbar( trackbar_label, image_window, &match_method, max_Trackbar, MatchingMethod );

  MatchingMethod( 0, 0 );

  waitKey(0);
  return 0;
}

void MatchingMethod( int, void* )
{
  // Work on a copy so the rectangle is not drawn on the original image
  Mat img_display;
  img.copyTo( img_display );

  // The result matrix has one entry per possible template position
  int result_cols = img.cols - templ.cols + 1;
  int result_rows = img.rows - templ.rows + 1;

  result.create( result_rows, result_cols, CV_32FC1 );

  // Only TM_SQDIFF and TM_CCORR_NORMED are given the mask
  bool method_accepts_mask = (TM_SQDIFF == match_method || match_method == TM_CCORR_NORMED);
  if (use_mask && method_accepts_mask)
    { matchTemplate( img, templ, result, match_method, mask); }
  else
    { matchTemplate( img, templ, result, match_method); }

  normalize( result, result, 0, 1, NORM_MINMAX, -1, Mat() );

  // Localize the best match with minMaxLoc
  double minVal; double maxVal; Point minLoc; Point maxLoc;
  Point matchLoc;

  minMaxLoc( result, &minVal, &maxVal, &minLoc, &maxLoc, Mat() );

  // For SQDIFF and SQDIFF_NORMED lower values are better; for the others, higher values are better
  if( match_method == TM_SQDIFF || match_method == TM_SQDIFF_NORMED )
    { matchLoc = minLoc; }
  else
    { matchLoc = maxLoc; }

  rectangle( img_display, matchLoc, Point( matchLoc.x + templ.cols , matchLoc.y + templ.rows ), Scalar::all(0), 2, 8, 0 );
  rectangle( result, matchLoc, Point( matchLoc.x + templ.cols , matchLoc.y + templ.rows ), Scalar::all(0), 2, 8, 0 );

  imshow( image_window, img_display );
  imshow( result_window, result );

  return;
}

Explanation

- Declare some global variables, such as the image, template and result matrices, as well as the match method and the window names:

bool use_mask;
Mat img; Mat templ; Mat mask; Mat result;
const char* image_window = "Source Image";
const char* result_window = "Result window";
int match_method;
int max_Trackbar = 5;

- Load the source image, the template, and optionally, if supported by the matching method, a mask:

img = imread( argv[1], IMREAD_COLOR );
templ = imread( argv[2], IMREAD_COLOR );
if(argc > 3) {
  use_mask = true;
  mask = imread( argv[3], IMREAD_COLOR );
}
if(img.empty() || templ.empty() || (use_mask && mask.empty()))
{
  cout << "Can't read one of the images" << endl;
  return -1;
}

- Create the trackbar to enter the kind of matching method to be used. When a change is detected, the callback function is called.

const char* trackbar_label = "Method: \n 0: SQDIFF \n 1: SQDIFF NORMED \n 2: TM CCORR \n 3: TM CCORR NORMED \n 4: TM COEFF \n 5: TM COEFF NORMED";
createTrackbar( trackbar_label, image_window, &match_method, max_Trackbar, MatchingMethod );

- Let's check out the callback function. First, it makes a copy of the source image:

Mat img_display;
img.copyTo( img_display );

- Perform the template matching operation. The arguments are naturally the input image I, the template T, the result R, the match_method (given by the trackbar), and optionally the mask image M.

bool method_accepts_mask = (TM_SQDIFF == match_method || match_method == TM_CCORR_NORMED);
if (use_mask && method_accepts_mask)
  { matchTemplate( img, templ, result, match_method, mask); }
else
  { matchTemplate( img, templ, result, match_method); }

- We normalize the results:

normalize( result, result, 0, 1, NORM_MINMAX, -1, Mat() );

- We localize the minimum and maximum values in the result matrix R by using minMaxLoc().

double minVal; double maxVal; Point minLoc; Point maxLoc;
Point matchLoc;
minMaxLoc( result, &minVal, &maxVal, &minLoc, &maxLoc, Mat() );

- For the first two methods (TM_SQDIFF and TM_SQDIFF_NORMED), the best match is the lowest value. For all the others, higher values represent better matches. So, we save the corresponding location in the matchLoc variable:

if( match_method == TM_SQDIFF || match_method == TM_SQDIFF_NORMED )
  { matchLoc = minLoc; }
else
  { matchLoc = maxLoc; }

- Display the source image and the result matrix. Draw a rectangle around the highest possible matching area:

rectangle( img_display, matchLoc, Point( matchLoc.x + templ.cols , matchLoc.y + templ.rows ), Scalar::all(0), 2, 8, 0 );
rectangle( result, matchLoc, Point( matchLoc.x + templ.cols , matchLoc.y + templ.rows ), Scalar::all(0), 2, 8, 0 );
imshow( image_window, img_display );
imshow( result_window, result );

Java code at a glance

import org.opencv.core.*;
import org.opencv.core.Point;
import org.opencv.imgcodecs.Imgcodecs;
import org.opencv.imgproc.Imgproc;

import javax.swing.*;
import javax.swing.event.ChangeEvent;
import javax.swing.event.ChangeListener;
import java.awt.*;
import java.awt.image.BufferedImage;
import java.awt.image.DataBufferByte;
import java.util.*;

class MatchTemplateDemoRun implements ChangeListener{

    // Global variables
    Boolean use_mask = false;
    Mat img = new Mat(), templ = new Mat();
    Mat mask = new Mat();

    int match_method;

    JLabel imgDisplay = new JLabel(), resultDisplay = new JLabel();

    public void run(String[] args) {

        if (args.length < 2)
        {
            System.out.println("Not enough parameters");
            System.out.println("Program arguments:\n<image_name> <template_name> [<mask_name>]");
            System.exit(-1);
        }

        // Load the image, the template and (optionally) the mask
        img = Imgcodecs.imread( args[0], Imgcodecs.IMREAD_COLOR );
        templ = Imgcodecs.imread( args[1], Imgcodecs.IMREAD_COLOR );

        if(args.length > 2) {
            use_mask = true;
            mask = Imgcodecs.imread( args[2], Imgcodecs.IMREAD_COLOR );
        }

        if(img.empty() || templ.empty() || (use_mask && mask.empty()))
        {
            System.out.println("Can't read one of the images");
            System.exit(-1);
        }

        matchingMethod();
        createJFrame();
    }

    private void matchingMethod() {

        Mat result = new Mat();

        // Work on a copy so the rectangle is not drawn on the original image
        Mat img_display = new Mat();
        img.copyTo( img_display );

        // The result matrix has one entry per possible template position
        int result_cols = img.cols() - templ.cols() + 1;
        int result_rows = img.rows() - templ.rows() + 1;

        result.create( result_rows, result_cols, CvType.CV_32FC1 );

        Boolean method_accepts_mask = (Imgproc.TM_SQDIFF == match_method ||
                match_method == Imgproc.TM_CCORR_NORMED);

        if (use_mask && method_accepts_mask)
        { Imgproc.matchTemplate( img, templ, result, match_method, mask); }
        else
        { Imgproc.matchTemplate( img, templ, result, match_method); }

        Core.normalize( result, result, 0, 1, Core.NORM_MINMAX, -1, new Mat() );

        double minVal; double maxVal;
        Point matchLoc;

        Core.MinMaxLocResult mmr = Core.minMaxLoc( result );

        // For SQDIFF and SQDIFF_NORMED, the best matches are lower values.
        // For all the other methods, the higher the better
        if( match_method == Imgproc.TM_SQDIFF || match_method == Imgproc.TM_SQDIFF_NORMED )
        { matchLoc = mmr.minLoc; }
        else
        { matchLoc = mmr.maxLoc; }

        Imgproc.rectangle(img_display, matchLoc, new Point(matchLoc.x + templ.cols(),
                matchLoc.y + templ.rows()), new Scalar(0, 0, 0), 2, 8, 0);
        Imgproc.rectangle(result, matchLoc, new Point(matchLoc.x + templ.cols(),
                matchLoc.y + templ.rows()), new Scalar(0, 0, 0), 2, 8, 0);

        Image tmpImg = toBufferedImage(img_display);
        ImageIcon icon = new ImageIcon(tmpImg);
        imgDisplay.setIcon(icon);

        // Scale the [0, 1] float result to 8 bits so it can be displayed
        result.convertTo(result, CvType.CV_8UC1, 255.0);
        tmpImg = toBufferedImage(result);
        icon = new ImageIcon(tmpImg);
        resultDisplay.setIcon(icon);
    }

    public void stateChanged(ChangeEvent e) {
        JSlider source = (JSlider) e.getSource();
        if (!source.getValueIsAdjusting()) {
            match_method = source.getValue();
            matchingMethod();
        }
    }

    // Copy the Mat's pixels into a BufferedImage (grayscale or BGR)
    public Image toBufferedImage(Mat m) {
        int type = BufferedImage.TYPE_BYTE_GRAY;
        if ( m.channels() > 1 ) {
            type = BufferedImage.TYPE_3BYTE_BGR;
        }
        int bufferSize = m.channels()*m.cols()*m.rows();
        byte [] b = new byte[bufferSize];
        m.get(0,0,b); // get all the pixels
        BufferedImage image = new BufferedImage(m.cols(),m.rows(), type);
        final byte[] targetPixels = ((DataBufferByte) image.getRaster().getDataBuffer()).getData();
        System.arraycopy(b, 0, targetPixels, 0, b.length);
        return image;
    }

    private void createJFrame() {

        String title = "Source image; Control; Result image";
        JFrame frame = new JFrame(title);
        frame.setLayout(new GridLayout(2, 2));
        frame.add(imgDisplay);

        int min = 0, max = 5;
        JSlider slider = new JSlider(JSlider.VERTICAL, min, max, match_method);

        slider.setPaintTicks(true);
        slider.setPaintLabels(true);

        // Set the spacing for the minor tick mark
        slider.setMinorTickSpacing(1);

        // Customizing the labels
        Hashtable<Integer, JLabel> labelTable = new Hashtable<>();
        labelTable.put( 0, new JLabel("0 - SQDIFF") );
        labelTable.put( 1, new JLabel("1 - SQDIFF NORMED") );
        labelTable.put( 2, new JLabel("2 - TM CCORR") );
        labelTable.put( 3, new JLabel("3 - TM CCORR NORMED") );
        labelTable.put( 4, new JLabel("4 - TM COEFF") );
        labelTable.put( 5, new JLabel("5 - TM COEFF NORMED : (Method)") );
        slider.setLabelTable( labelTable );

        slider.addChangeListener(this);

        frame.add(slider);
        frame.add(resultDisplay);
        frame.setDefaultCloseOperation(JFrame.EXIT_ON_CLOSE);
        frame.pack();
        frame.setVisible(true);
    }
}

public class MatchTemplateDemo
{
    public static void main(String[] args) {
        // load the native OpenCV library
        System.loadLibrary(Core.NATIVE_LIBRARY_NAME);

        // run code
        new MatchTemplateDemoRun().run(args);
    }
}

Explanation

- Declare some global variables, such as the image, template and result matrices, as well as the match method and the window names:

Boolean use_mask = false;
Mat img = new Mat(), templ = new Mat();
Mat mask = new Mat();
int match_method;
JLabel imgDisplay = new JLabel(), resultDisplay = new JLabel();

- Load the source image, the template, and optionally, if supported by the matching method, a mask:

img = Imgcodecs.imread( args[0], Imgcodecs.IMREAD_COLOR );
templ = Imgcodecs.imread( args[1], Imgcodecs.IMREAD_COLOR );

- Create the trackbar to enter the kind of matching method to be used. When a change is detected, the callback function is called.

int min = 0, max = 5;
JSlider slider = new JSlider(JSlider.VERTICAL, min, max, match_method);

- Let's check out the callback function. First, it makes a copy of the source image:

Mat img_display = new Mat();
img.copyTo( img_display );

- Perform the template matching operation. The arguments are naturally the input image I, the template T, the result R, the match_method (given by the trackbar), and optionally the mask image M.

Boolean method_accepts_mask = (Imgproc.TM_SQDIFF == match_method ||
        match_method == Imgproc.TM_CCORR_NORMED);
if (use_mask && method_accepts_mask)
{ Imgproc.matchTemplate( img, templ, result, match_method, mask); }
else
{ Imgproc.matchTemplate( img, templ, result, match_method); }

- We normalize the results:

Core.normalize( result, result, 0, 1, Core.NORM_MINMAX, -1, new Mat() );

- We localize the minimum and maximum values in the result matrix R by using minMaxLoc().

double minVal; double maxVal;
Point matchLoc;
Core.MinMaxLocResult mmr = Core.minMaxLoc( result );

- For the first two methods (TM_SQDIFF and TM_SQDIFF_NORMED), the best match is the lowest value. For all the others, higher values represent better matches. So, we save the corresponding location in the matchLoc variable:

// For all the other methods, the higher the better
if( match_method == Imgproc.TM_SQDIFF || match_method == Imgproc.TM_SQDIFF_NORMED )
{ matchLoc = mmr.minLoc; }
else
{ matchLoc = mmr.maxLoc; }

- Display the source image and the result matrix. Draw a rectangle around the highest possible matching area:

Imgproc.rectangle(img_display, matchLoc, new Point(matchLoc.x + templ.cols(),
        matchLoc.y + templ.rows()), new Scalar(0, 0, 0), 2, 8, 0);
Imgproc.rectangle(result, matchLoc, new Point(matchLoc.x + templ.cols(),
        matchLoc.y + templ.rows()), new Scalar(0, 0, 0), 2, 8, 0);
Image tmpImg = toBufferedImage(img_display);
ImageIcon icon = new ImageIcon(tmpImg);
imgDisplay.setIcon(icon);
result.convertTo(result, CvType.CV_8UC1, 255.0);
tmpImg = toBufferedImage(result);
icon = new ImageIcon(tmpImg);
resultDisplay.setIcon(icon);

Python code at a glance

import sys
import cv2

# Global variables
use_mask = False
img = None
templ = None
mask = None
image_window = "Source Image"
result_window = "Result window"

match_method = 0
max_Trackbar = 5

def main(argv):

    if (len(sys.argv) < 3):
        print('Not enough parameters')
        print('Usage:\nmatch_template_demo.py <image_name> <template_name> [<mask_name>]')
        return -1

    # Load the image, the template and (optionally) the mask
    global img
    global templ
    img = cv2.imread(sys.argv[1], cv2.IMREAD_COLOR)
    templ = cv2.imread(sys.argv[2], cv2.IMREAD_COLOR)

    if (len(sys.argv) > 3):
        global use_mask
        use_mask = True
        global mask
        mask = cv2.imread( sys.argv[3], cv2.IMREAD_COLOR )

    if ((img is None) or (templ is None) or (use_mask and (mask is None))):
        print('Can\'t read one of the images')
        return -1

    cv2.namedWindow( image_window, cv2.WINDOW_AUTOSIZE )
    cv2.namedWindow( result_window, cv2.WINDOW_AUTOSIZE )

    # Create the trackbar; MatchingMethod is called whenever it changes
    trackbar_label = 'Method: \n 0: SQDIFF \n 1: SQDIFF NORMED \n 2: TM CCORR \n 3: TM CCORR NORMED \n 4: TM COEFF \n 5: TM COEFF NORMED'
    cv2.createTrackbar( trackbar_label, image_window, match_method, max_Trackbar, MatchingMethod )

    MatchingMethod(match_method)

    cv2.waitKey(0)
    return 0

def MatchingMethod(param):

    global match_method
    match_method = param

    # Work on a copy so the rectangle is not drawn on the original image
    img_display = img.copy()

    # Only TM_SQDIFF and TM_CCORR_NORMED are given the mask
    method_accepts_mask = (cv2.TM_SQDIFF == match_method or match_method == cv2.TM_CCORR_NORMED)
    if (use_mask and method_accepts_mask):
        result = cv2.matchTemplate(img, templ, match_method, None, mask)
    else:
        result = cv2.matchTemplate(img, templ, match_method)

    cv2.normalize( result, result, 0, 1, cv2.NORM_MINMAX, -1 )

    _minVal, _maxVal, minLoc, maxLoc = cv2.minMaxLoc(result, None)

    # For SQDIFF and SQDIFF_NORMED lower values are better; for the others, higher values are better
    if (match_method == cv2.TM_SQDIFF or match_method == cv2.TM_SQDIFF_NORMED):
        matchLoc = minLoc
    else:
        matchLoc = maxLoc

    # templ.shape is (rows, cols, channels): add the width (shape[1]) to x and the height (shape[0]) to y
    cv2.rectangle(img_display, matchLoc, (matchLoc[0] + templ.shape[1], matchLoc[1] + templ.shape[0]), (0,0,0), 2, 8, 0 )
    cv2.rectangle(result, matchLoc, (matchLoc[0] + templ.shape[1], matchLoc[1] + templ.shape[0]), (0,0,0), 2, 8, 0 )
    cv2.imshow(image_window, img_display)
    cv2.imshow(result_window, result)
    pass

if __name__ == "__main__":
    main(sys.argv[1:])

Explanation

- Declare some global variables, such as the image, template and result matrices, as well as the match method and the window names:

use_mask = False
img = None
templ = None
mask = None
image_window = "Source Image"
result_window = "Result window"

match_method = 0
max_Trackbar = 5

- Load the source image, the template, and optionally, if supported by the matching method, a mask:

global img
global templ
img = cv2.imread(sys.argv[1], cv2.IMREAD_COLOR)
templ = cv2.imread(sys.argv[2], cv2.IMREAD_COLOR)

if (len(sys.argv) > 3):
    global use_mask
    use_mask = True
    global mask
    mask = cv2.imread( sys.argv[3], cv2.IMREAD_COLOR )

if ((img is None) or (templ is None) or (use_mask and (mask is None))):
    print('Can\'t read one of the images')
    return -1

- Create the trackbar to enter the kind of matching method to be used. When a change is detected, the callback function is called.

trackbar_label = 'Method: \n 0: SQDIFF \n 1: SQDIFF NORMED \n 2: TM CCORR \n 3: TM CCORR NORMED \n 4: TM COEFF \n 5: TM COEFF NORMED'
cv2.createTrackbar( trackbar_label, image_window, match_method, max_Trackbar, MatchingMethod )

- Let's check out the callback function. First, it makes a copy of the source image:

img_display = img.copy()

- Perform the template matching operation. The arguments are naturally the input image I, the template T, the match_method (given by the trackbar), and optionally the mask image M; in Python the result R is the function's return value.

method_accepts_mask = (cv2.TM_SQDIFF == match_method or match_method == cv2.TM_CCORR_NORMED)
if (use_mask and method_accepts_mask):
    result = cv2.matchTemplate(img, templ, match_method, None, mask)
else:
    result = cv2.matchTemplate(img, templ, match_method)

- We normalize the results:

cv2.normalize( result, result, 0, 1, cv2.NORM_MINMAX, -1 )
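With alpha=0 and beta=1, `NORM_MINMAX` linearly rescales R so that its minimum becomes 0 and its maximum becomes 1. The NumPy sketch below shows this rescaling. The helper name `normalize_minmax` is hypothetical, and returning zeros for a constant map is a choice of this sketch. Because the rescaling is monotonic, it changes the displayed values but not the positions of the minimum and maximum.

```python
import numpy as np

def normalize_minmax(R):
    """Linear rescale of R to [0, 1], like NORM_MINMAX with alpha=0, beta=1."""
    R = np.asarray(R, dtype=np.float64)
    lo, hi = R.min(), R.max()
    if hi == lo:                 # constant map: this sketch simply returns zeros
        return np.zeros_like(R)
    return (R - lo) / (hi - lo)

R = np.array([[4.0, 9.0, 1.0],
              [7.0, 2.0, 6.0]])
N = normalize_minmax(R)
# Monotonic rescaling: the best-match location does not move
assert np.argmin(N) == np.argmin(R) and np.argmax(N) == np.argmax(R)
print(N.min(), N.max())          # → 0.0 1.0
```

One consequence is that normalized values are relative to the current image. A fixed threshold on the normalized map therefore does not mean the same thing across different images.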

- We localize the minimum and maximum values in the result matrix R by using minMaxLoc().

_minVal, _maxVal, minLoc, maxLoc = cv2.minMaxLoc(result, None)
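Two details in the next steps are easy to get wrong in Python:

- `cv2.minMaxLoc` returns locations as (x, y), i.e. (column, row), while NumPy indexes arrays as [row, column].
- `templ.shape` is (rows, cols, channels).

The NumPy-only sketch below mimics that convention and computes the rectangle corners by adding the template width to x and its height to y. The helpers `min_max_loc` and `match_rectangle` are hypothetical, not OpenCV functions. The sketch also relies on the enum values TM_SQDIFF = 0 and TM_SQDIFF_NORMED = 1, which is why the trackbar position can be passed directly to `matchTemplate()`.

```python
import numpy as np

SQDIFF_METHODS = (0, 1)   # cv2.TM_SQDIFF == 0, cv2.TM_SQDIFF_NORMED == 1: lower is better

def min_max_loc(R):
    """Mimic the return value of cv2.minMaxLoc for a 2-D array:
    (minVal, maxVal, minLoc, maxLoc) with locations given as (x, y)."""
    R = np.asarray(R)
    r_min, c_min = np.unravel_index(np.argmin(R), R.shape)   # NumPy order: (row, col)
    r_max, c_max = np.unravel_index(np.argmax(R), R.shape)
    return R.min(), R.max(), (int(c_min), int(r_min)), (int(c_max), int(r_max))

def match_rectangle(R, method, templ_shape):
    """Top-left and bottom-right corners of the best match."""
    _, _, min_loc, max_loc = min_max_loc(R)
    x, y = min_loc if method in SQDIFF_METHODS else max_loc
    h, w = templ_shape[:2]            # shape is (rows, cols[, channels])
    return (x, y), (x + w, y + h)     # add the width to x and the height to y

R = np.array([[0.5, 0.2, 0.9],
              [0.1, 0.7, 0.3]])
print(match_rectangle(R, 0, (10, 20, 3)))   # → ((0, 1), (20, 11))
print(match_rectangle(R, 5, (10, 20, 3)))   # → ((2, 0), (22, 10))
```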

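- For the first two methods (TM_SQDIFF and TM_SQDIFF_NORMED), the best match is the lowest value. For all the others, higher values represent better matches. So, we save the corresponding location in the matchLoc variable:

if (match_method == cv2.TM_SQDIFF or match_method == cv2.TM_SQDIFF_NORMED):
    matchLoc = minLoc
else:
    matchLoc = maxLoc

- Display the source image and the result matrix. Draw a rectangle around the highest possible matching area:

# templ.shape is (rows, cols, channels): add the width (shape[1]) to x and the height (shape[0]) to y
cv2.rectangle(img_display, matchLoc, (matchLoc[0] + templ.shape[1], matchLoc[1] + templ.shape[0]), (0,0,0), 2, 8, 0 )
cv2.rectangle(result, matchLoc, (matchLoc[0] + templ.shape[1], matchLoc[1] + templ.shape[0]), (0,0,0), 2, 8, 0 )
cv2.imshow(image_window, img_display)
cv2.imshow(result_window, result)

Results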

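- Testing our program with an input image such as:

and a template image:

- Generate the following result matrices (the first row shows the standard methods SQDIFF, CCORR and CCOEFF; the second row shows the same methods in their normalized versions). In the first column, the darker a location, the better the match; in the other two columns, the brighter a location, the higher the match.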

Result images: Result_0, Result_1, Result_2, Result_3, Result_4, Result_5

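The right match is shown below (black rectangle around the face of the guy on the right). Notice that CCORR and CCOEFF gave erroneous best matches, whereas their normalized versions got it right. This may be because we are only considering the "highest match" and not the other possible high matches.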
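The template may occur more than once, or the single highest score may be misleading, as described above. In such cases, one common approach is to keep every location whose score passes a threshold and to suppress overlapping duplicates. This is not part of the tutorial's program. The greedy NumPy sketch below assumes a "higher is better" result map (e.g. from TM_CCOEFF_NORMED). The `top_matches` name, the threshold and the suppression rule are all illustrative choices.

```python
import numpy as np

def top_matches(R, templ_shape, threshold, max_matches=10):
    """Greedy sketch for 'higher is better' result maps: repeatedly take the
    best remaining location, then suppress every top-left position whose
    template rectangle would overlap it, so one object is reported once."""
    R = np.array(R, dtype=np.float64)          # work on a copy
    h, w = templ_shape[:2]
    found = []
    while len(found) < max_matches:
        r, c = np.unravel_index(np.argmax(R), R.shape)
        if R[r, c] < threshold:
            break
        found.append((int(c), int(r)))          # store as (x, y), like minMaxLoc
        R[max(r - h + 1, 0):r + h, max(c - w + 1, 0):c + w] = -np.inf
    return found

R = np.zeros((6, 8))
R[1, 1] = 0.95    # an object
R[1, 2] = 0.90    # the same object, one pixel to the right
R[4, 6] = 0.85    # a second object
print(top_matches(R, (2, 2), threshold=0.8))   # → [(1, 1), (6, 4)]
```

With OpenCV, R could come from `cv2.matchTemplate(img, templ, cv2.TM_CCOEFF_NORMED)`. For the SQDIFF methods, negate the map first so that lower scores become higher ones.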